Make It Sound Like YOU

The Problem: AI has recognizable patterns and phrases that hiring managers are learning to spot. If your materials sound “AI-generated,” it can work against you.

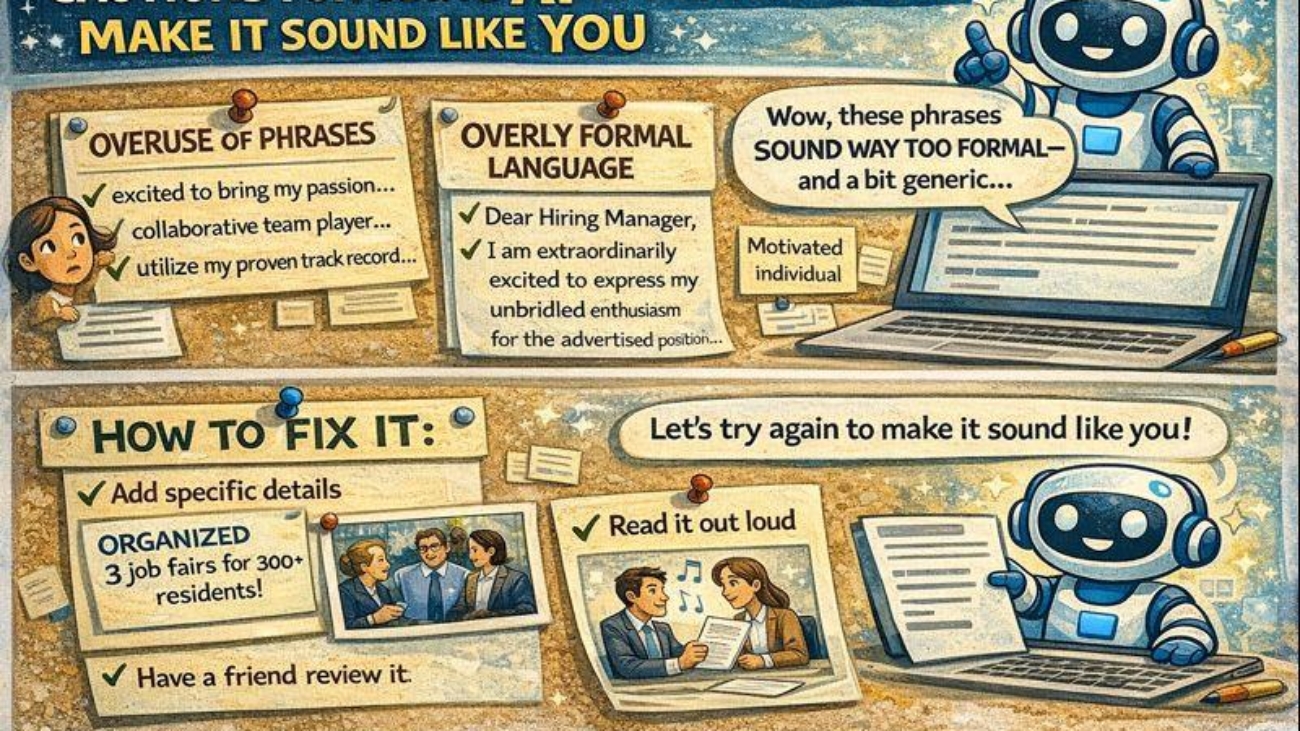

Common AI Tell-Tale Signs:

- Overuse of the same stock phrases

- Overly formal or corporate language that doesn’t match how real people talk

- Generic enthusiasm: “I am excited to bring my passion for…”

- Perfectly structured sentences that lack personal voice

- Buzzword-heavy writing without substance

How to Fix It:

- Give the AI feedback frequently while crafting your materials

- Add specific details – Generic: “Led successful projects” → Real: “Coordinated 3 community health fairs that served 200+ residents”

- Inject personality – Your cover letter should sound like you, not a robot

- Read it out loud – Does it sound like something you’d actually say?

- Have a friend read it – Ask: “Does this sound like me?”

🎯 AI Doesn’t Always Know Best Practices

The Reality: AI is trained on massive amounts of text from the internet—including bad examples, outdated advice, and conflicting opinions. It doesn’t inherently know current professional standards unless you tell it to follow them.

Where AI Often Gets It Wrong:

Resumes:

❌ May suggest outdated formats (objective statements, “References available upon request”)

❌ Might create overly long bullet points

❌ Could recommend including personal info that shouldn’t be there (age, photo, marital status)

❌ May not follow ATS-friendly formatting

What to Do:

When asking AI for resume help, add this to your prompt:

"Please follow current best practices for [your field] resumes, including:

- Action verbs at the start of bullets

- Quantifiable achievements where possible

- ATS-friendly formatting (no tables, columns, or graphics)

- Concise bullets (1-2 lines max)

- No objective statements or outdated elements"

Cover Letters:

❌ May create generic, overly formal letters

❌ Could miss the connection between your experience and their needs

❌ Might be too long (should be 3-4 paragraphs max)

What to Do:

Add to your prompt:

"Follow best practices for modern cover letters:

- Be concise (under 400 words)

- Use 'I' voice, conversational but professional

- Connect MY specific experience to THEIR specific needs

- Reference something specific about the company

- Show genuine interest, not generic enthusiasm"

Interview Prep:

❌ May suggest rehearsed-sounding answers

❌ Could recommend overly detailed responses (interview answers should be 1-2 minutes)

❌ Might miss the STAR method structure for behavioral questions

What to Do:

Add to your prompt:

"Please follow best practices for interview responses:

- Use STAR method (Situation, Task, Action, Result)

- Keep responses to 90-120 seconds when spoken aloud

- Focus on specific examples, not general statements

- End with the measurable result or lesson learned"

🔍 Get Expert Review When It Matters

When to Seek Human Expertise:

✓ Final resume review – Career counselors, people in your target field, or professional resume reviewers can catch things AI misses

✓ Industry-specific standards – AI might not know your field’s norms (academic CVs, creative portfolios, technical resumes all have different expectations)

✓ Mock interviews – Practice with real humans who can give feedback on body language, tone, and pacing (not just content)

✓ Salary negotiation – Get advice from mentors in your field about realistic ranges and negotiation strategies

✓ Career direction – AI can suggest options, but humans who know you can give personalized guidance

Where to Find Expert Help:

- Career counselors at CTIC, libraries, or community organizations

- Alumni networks or professional associations

- Mentors in your target field

- Friends/colleagues who work in roles you’re targeting

✅ The Right Way to Use AI in Your Job Search

DO:

- ✅ Use AI to generate first drafts and inspiration

- ✅ Use AI to expand your thinking (job titles, company research, questions to ask)

- ✅ Use AI to save time on formatting and structure

- ✅ Use AI to practice and refine your messaging

DON’T:

- ❌ Copy-paste AI responses without significant editing

- ❌ Trust AI’s advice without checking it against current best practices

- ❌ Let AI replace human connection and networking

- ❌ Assume AI knows your industry’s specific norms

- ❌ Use AI to fake qualifications or experience you don’t have

💡 Final Wisdom

Remember: AI is a tool, not a solution.

It’s like spell-check—helpful for catching things you’d miss, but it doesn’t make you a good writer. You still need to:

- Bring your authentic self

- Build genuine relationships

- Develop real skills and experience

- Make thoughtful career decisions

- Trust your judgment about fit

The best job search combines:

- AI’s efficiency (research, drafting, brainstorming)

- Human expertise (career counseling, industry insight, mentorship)

- Your authenticity (unique story, genuine connections, personal judgment)

Questions? Need help making your AI-generated materials sound more like YOU?

Schedule a one-on-one appointment or bring your drafts to our next workshop!